Real-Time Multimodal Signal Processing

May 1, 2019

Overview

In this project, I developed a Python application that processes multimodal signals (physiological and audiovisual) in real time and feeds them into an SSI (Social Signal Interpretation) pipeline. Alongside the multimodal signals, I used EmoVoice, a set of tools for building a real-time emotion recognizer from the acoustic properties of speech (without using word information). Sensors and sensor combinations include the following:

- GSR (Galvanic Skin Response)

  - Refers to changes in sweat gland activity that reflect the intensity of our emotional state, otherwise known as emotional arousal.

- PPG (Photoplethysmography LED Pulse Sensor)

  - Uses light-based technology to sense the rate of blood flow as controlled by the heart’s pumping action.

- ECG (Electrocardiography)

  - Measures the bio-potential generated by the electrical signals that control the expansion and contraction of the heart chambers.

- EEG (Electroencephalography)

  - An electrophysiological monitoring method that records the electrical activity of the brain.

- EEG with PPG

- EEG with GSR

- EEG with ECG

- EEG with PPG, GSR

- EEG with ECG, GSR

- EEG with PPG, GSR, and Audio-visual
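The write-up does not describe how each sensor stream was processed before entering the pipeline. As a hypothetical illustration of the PPG entry above, the sketch below estimates heart rate one sample at a time, the way a real-time pipeline receives data. The class name `StreamingHeartRate`, the 5 s window, the 0.3 s refractory period, and the simple peak rule (a local maximum above the running mean) are all assumptions, not the project's actual code; a real PPG signal would normally be band-pass filtered first.

```python
import math
from collections import deque


class StreamingHeartRate:
    """Hypothetical streaming heart-rate estimator for a PPG signal.

    A beat is counted at a local maximum that lies above the mean of the
    recent window and at least `refractory_s` after the previous beat.
    """

    def __init__(self, fs, window_s=5.0, refractory_s=0.3, max_beats=8):
        self.fs = fs                                    # sampling rate in Hz (assumed known)
        self.window = deque(maxlen=int(fs * window_s))  # recent samples for the running mean
        self.refractory = int(fs * refractory_s)        # minimum samples between two beats
        self.beats = deque(maxlen=max_beats)            # sample indices of recent beats
        self.prev2 = None                               # sample at index n - 2
        self.prev1 = None                               # sample at index n - 1
        self.n = 0                                      # number of samples pushed so far

    def push(self, x):
        """Add one sample; return the current BPM estimate, or None if too few beats."""
        self.window.append(x)
        a, b = self.prev2, self.prev1
        # The previous sample b is a local maximum if it rose from a and x did not exceed it.
        if a is not None and a < b >= x:
            peak_idx = self.n - 1
            mean = sum(self.window) / len(self.window)
            far_enough = not self.beats or peak_idx - self.beats[-1] >= self.refractory
            if b > mean and far_enough:
                self.beats.append(peak_idx)
        self.prev2, self.prev1 = b, x
        self.n += 1
        if len(self.beats) < 2:
            return None
        # Average inter-beat interval in samples, converted to beats per minute.
        beats = list(self.beats)
        mean_interval = (beats[-1] - beats[0]) / (len(beats) - 1)
        return 60.0 * self.fs / mean_interval


if __name__ == "__main__":
    hr = StreamingHeartRate(fs=100)
    bpm = None
    # 10 s of a synthetic 1.2 Hz "pulse" with a DC offset, standing in for a PPG stream.
    for i in range(1000):
        bpm = hr.push(512 + 100 * math.sin(2 * math.pi * 1.2 * i / 100))
    print(round(bpm, 1))
```

With a 1.2 Hz input, the estimate should settle close to 72 BPM (1.2 beats per second times 60). Keeping only the last few beats in a bounded `deque` lets the estimate track changes in heart rate without storing the whole recording.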

Motivation

My career goal in robotics is to improve people’s lives, and a large part of that is figuring out how computers can reliably detect emotion. Many studies have addressed facial emotion recognition, yet other modalities deserve more exploration, since we, as humans, express emotion in various ways. I believe one of the most important of these is physiological signals, such as EEG (brain-wave signals), ECG, and PPG (heart-rate signals), especially when combined with audiovisual cues and voice emotion detection. This project explores the initial phase: processing such signals in real time into a social signal processing pipeline (as part of the SSI Social Signal Interpretation framework) to support future work on fusing the signals and eventually detecting a person’s emotional state in real time. Processing the signals as they arrive could help address a limitation of prior emotion detection algorithms that rely only on previously recorded data, which lowers their reliability. Ultimately, I hope for a future in which robots can receive these multimodal signals in real time and potentially help save lives.

Demo videos:

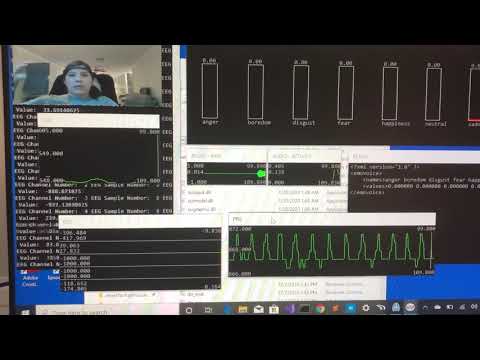

EEG with PPG, GSR AND AUDIOVISUAL, PLUS VOICE EMOTION DETECTION!

Detecting “disgust, sadness and boredom” in voice

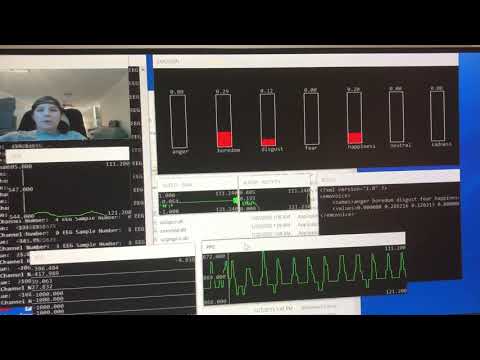

EEG with PPG, GSR AND AUDIOVISUAL, PLUS VOICE EMOTION DETECTION!

Detecting “happiness” in voice

EEG with PPG, GSR AND AUDIOVISUAL:

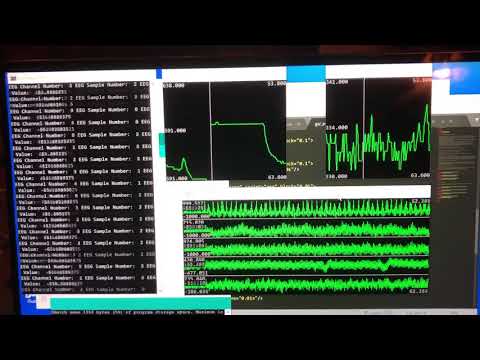

EEG with ECG, GSR: